April 24 and 25, 2024

BLOG POST 2

Tony Alves, SVP of Product Management, attended the STM US Annual Conference in Washington, DC, April 24 and 25, 2024. The meeting centered on the transformative impact of artificial intelligence and digital technologies on scholarly publishing and communication. Key themes included integrating AI as a core component of scholarly practice, strengthening collaboration between humans and machines, and meeting the infrastructure needs of publishing's digital transformation. Discussions explored the challenges AI poses for ethics, transparency, and trust, emphasizing the need to maintain integrity within the scholarly community amid rapid technological change. The conference also highlighted the evolving roles within research offices, libraries, and editorial operations, considering how AI could optimize workflows, improve data management, and potentially reshape how research is disseminated. Taken together, the sessions called for a proactive embrace of technological innovation that still safeguards the core values and reliability of scholarly outputs.

Tony’s report is in three parts. In this second installment he examines how the various players active in the research endeavor look at, and are preparing for, the future of scholarly communication. Included are the voices of the Research Office, a STEM Researcher, a Social Science Researcher, and a Research Librarian.

Special Report: Research Offices of the Future

Nandita Quaderi, SVP and Editor-in-Chief, Web of Science, Clarivate

Focus: Examining how AI and digital transformation are reshaping research offices, with particular attention to funding acquisition, research evaluation, and administrative efficiency.

Key Points:

- Insights from a global survey highlighting the increasing reliance on AI for various administrative and evaluative processes in research offices.

- Potential challenges and opportunities presented by AI, including its impact on employment and research integrity.

A comprehensive survey conducted by “Research Professional News” examines the shifting dynamics within research offices worldwide, taking a detailed look at their evolving priorities, the challenges they face, and how they are integrating AI. The survey draws on insights from 50 interviews with key stakeholders in scholarly publishing and is further enriched by feedback from several dozen commentators and advisors.

The survey's key findings show that research offices are increasingly focused on securing more funding, which 74% of respondents named as a top priority. This is followed by demonstrating research impact (46%) and improving research quality (44%). These priorities are shaped by three main drivers of change: cost pressures (56%), the need to demonstrate research impact (48%), and the demands of research assessment exercises (43%). Interestingly, despite AI's significant role in other sectors, only 25% of the research offices surveyed currently see AI as a major change driver, though respondents recognize that its relevance is likely to grow.

In terms of measuring research impact, traditional metrics like publications (64%) and citations (49%) are still predominant. However, there is a noticeable shift towards valuing societal benefits (39%), which is anticipated to rise to 56% in five years, reflecting a broader trend towards recognizing diverse research outcomes. This aligns with increasing attention to EDI/DEI initiatives and Sustainable Development Goals (SDGs), both of which are seen as growing in importance for research assessments.

Researchers' own expectations add nuance to these findings. Most researchers (73%) expect research offices primarily to help them find funding opportunities, and 70% also want support with research proposals and bids. There is far less demand, however, for help complying with open access mandates (35%) or with measuring and reporting impact (18%). Since research offices rank demonstrating impact as their second-highest priority while fewer than one in five researchers ask for help with it, this points to a potential misalignment between what researchers need and the services research offices provide.

The application of AI within research offices presents both opportunities and challenges. AI’s potential benefits are recognized in areas such as compiling information for grant applications (57%), analyzing reasons for unsuccessful grants to improve future success rates (53.1%), and managing internal databases (39.2%). However, concerns remain about AI’s implications for employment within research offices, with some staff fearing job losses due to automation and process efficiencies.

On the topic of research integrity, the survey highlights significant concerns among office staff. The pressure to publish was identified by 63% as a major threat to research integrity, followed by insecure employment practices (38%) and cultural issues such as bullying (37%). Despite these concerns, fewer than half of the institutions surveyed are actively investigating complaints, though a majority are developing research integrity policies (65%) and offering training (64%).

Looking ahead, research offices anticipate that their top priorities will remain consistent over the next five years: obtaining funding, demonstrating research impact, and improving research quality. However, the types of impact they expect to measure are likely to evolve, with societal benefits, EDI/DEI, and SDGs becoming more significant. This shift is both a challenge and an opportunity for research offices, which will need to adapt their strategies to measure and report on these broader and more complex forms of research impact.

Overall, the survey paints a picture of research offices that are navigating a complex and rapidly changing landscape, where aligning with researcher expectations, integrating new technologies like AI, and adapting to new impact metrics are crucial for future success.

The STEM Researcher: A view from the future

Heather Whitney, Research Assistant Professor, Radiology, University of Chicago

Focus: Addressing the challenges of, and requirements for, developing advanced communication systems to support collaborative research and publication in STEM fields.

Key Points:

- Discussion of the friction points in current scientific publishing processes and how AI can alleviate them.

- The need for systems that support more effective data management and manuscript processing.

Researchers across various fields are expressing a clear need for more frictionless communication systems to alleviate the many challenges they face in publishing and collaboration. They hope for systems that not only facilitate smoother interactions but also enable them to contribute actively to developing these solutions. The main concern for researchers remains the meaningful integration of text and data, with publications and citation impact cited as their primary measures of success.

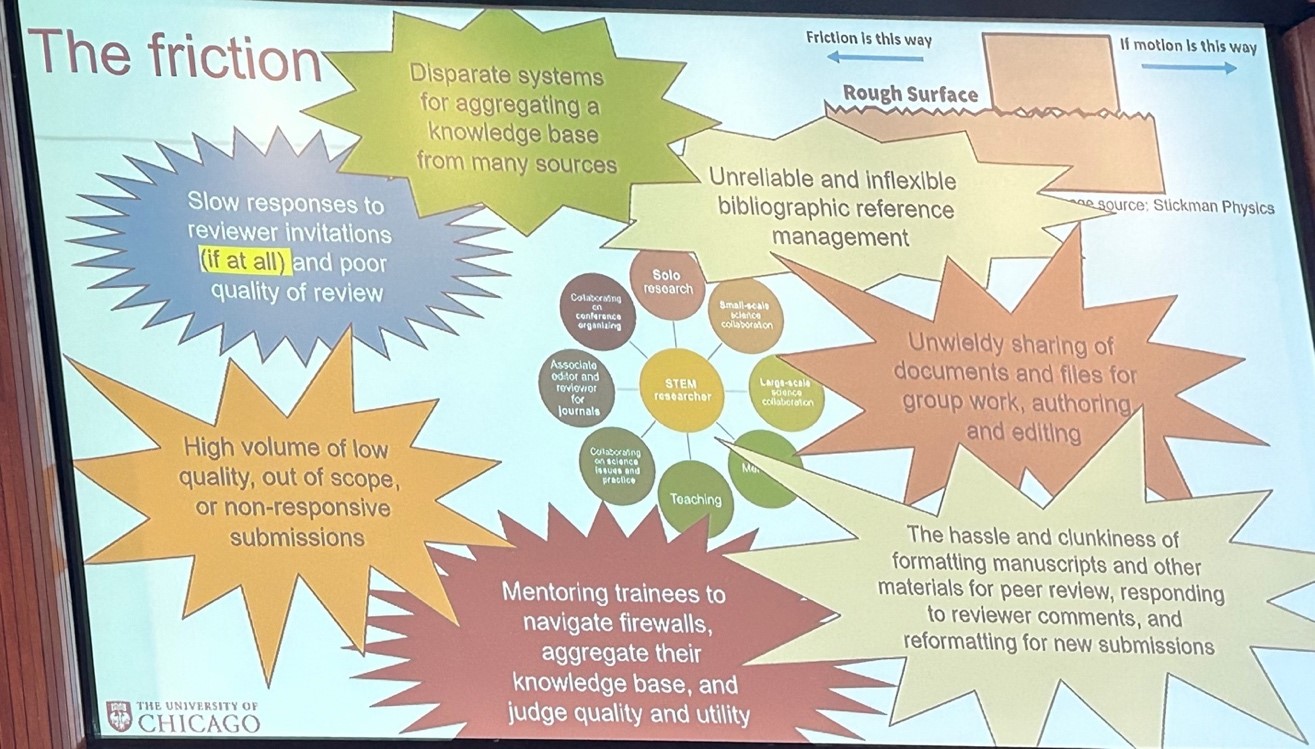

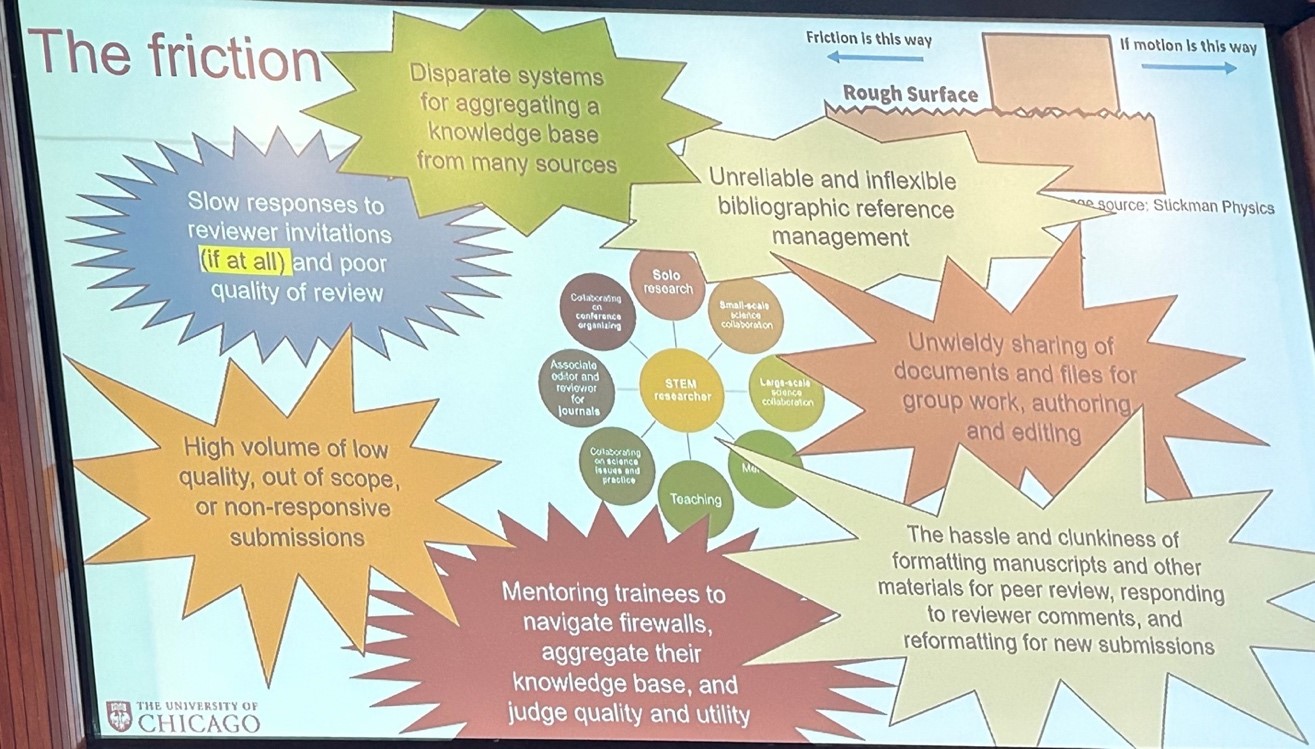

The friction in the current publishing process is multifaceted. Researchers struggle with disparate systems that make it hard to aggregate knowledge from diverse sources. Responses to reviewer invitations are slow, and the reviews that do arrive are often of poor quality. Bibliographic reference management systems are frequently unreliable and inflexible, which makes it harder to share documents and files for collaborative work. The volume of low-quality, out-of-scope, or non-responsive submissions adds to the burden, as does mentoring trainees through these complex systems. Finally, the cumbersome work of formatting manuscripts, responding to reviewer comments, and reformatting for subsequent submissions poses significant hurdles.

In response to these issues, there is a recognized need to explore the use of AI across scientific research and writing, and potentially in review and publishing as well. Researchers want communication systems that enhance community engagement, scaffold trainees' involvement in peer review, and support financially viable open access solutions. They also call for platforms that enable seamless text and data management, streamlined publication processes, timely responses, high-quality peer review, professional editing services, and ongoing innovation in communication systems. AI is also envisioned as a way to triage material throughout the publication pipeline and to help identify and curate texts and data.

The Social Scientist: A view from the future

Igor Grossmann, Ph.D., Professor of Psychology, University of Waterloo, Canada

Focus: Exploring the evolving roles of AI in social sciences, from enhancing research methodologies to addressing ethical considerations.

Key Points:

- Application of AI in refining research tools and addressing data biases.

- Emerging roles and professional dynamics as AI becomes integral to social scientific methodologies.

The roles of, and interactions among, AI, social scientists, and society are evolving. Examples include using large language models (LLMs) to help sociologists refine surveys about social behavior norms, to act as stand-ins for human participants in psychological studies, and to assist interdisciplinary teams studying potential biases in AI outputs that could affect hiring procedures. However, these applications raise significant challenges, such as biases in AI training data, design biases that reflect societal disparities, and the broader dilemma of whether AI should be aligned with an idealized world or with the real, flawed one.

In this AI-assisted world, new types of social scientists are emerging, such as bias hunters, “AIcologists,” simulators, diversity-seekers, computational objectors, and interactionists. These roles reflect a growing need to address and mitigate the challenges posed by integrating AI into the social sciences.

Concerns and hopes for the future include the impact of geopolitical changes on academic publishing, the strain on peer review systems caused by the AI-driven democratization of publishing, and the potential for AI to help overcome the replication crisis by standardizing robust research practices. Moreover, the democratization of knowledge through AI and online platforms could redefine scientific authority and prestige, although it also raises questions about trust and about how truth is verified in a post-truth world, which could deepen the existing crisis of confidence in science.

The Librarian: A view from the future

Cynthia Hudson Vitale, Director, Science Policy & Scholarship, Association of Research Libraries

Leo Lo, Dean of University Libraries, University of New Mexico

Focus: Speculating on various futures for research libraries influenced by the design and societal adaptation of AI.

Key Points:

- Scenarios ranging from highly optimistic to cautionary, outlining different potential outcomes based on AI development and integration.

In envisioning a future shaped by artificial intelligence within the research library environment, scenarios vary based on the interplay between AI design intentions—ethics, transparency, accountability—and societal adaptation to AI. These scenarios reveal diverse potential outcomes for how research libraries might operate and serve their communities.

Scenario 1 projects an optimistic future where AI development is guided by responsible, proactive principles prioritizing ethics, inclusivity, and transparency. In this vision, a seamless collaboration between governments, industries, and academia fosters the creation of standards that ensure the safety and accessibility of AI for everyone. Research libraries evolve into dynamic hubs, connecting people, data, and cutting-edge tools, thereby enhancing the accessibility and utility of information.

Scenario 2 contrasts sharply, depicting a future where AI development is primarily driven by commercial interests rather than societal needs. In this scenario, powerful corporations dominate AI technologies, creating disparities between the privileged and the underprivileged. Research libraries at elite institutions may thrive as exclusive enclaves with access restricted to a select few, while those serving broader publics struggle to keep pace with rapid technological changes, constrained by limited budgets.

Scenario 3 considers a world where poor AI design meets limited societal adaptation, leading to a landscape riddled with missed opportunities and unintended consequences. The development of AI in this scenario suffers from a lack of foresight and accountability, resulting in biased and opaque systems that perpetuate inequalities and erode public trust. Libraries, under-resourced and overwhelmed, strive to promote digital literacy and critical thinking among patrons, helping them navigate an increasingly murky line between truth and fiction.

Scenario 4 envisions a future where anticipative AI design meets limited societal adaptation, placing us on the cusp of a new era where AI becomes an increasingly autonomous partner in our quest for knowledge. Developed with a focus on transparency, accountability, and ethical considerations, AI systems begin to exhibit unprecedented capabilities, challenging traditional boundaries of knowledge creation. Librarians in this scenario face complex issues related to intellectual property, data governance, and the preservation of cultural heritage.

Each scenario has early signs that might indicate it is unfolding:

- Scenario 1 is characterized by collaborative efforts across sectors to develop responsible AI guidelines, increased investments in AI infrastructure, and a growing public trust in AI systems.

- Scenario 2 sees AI development concentrated in the hands of a few tech giants, with a lack of regulation and increasing public concern over privacy breaches and biased decision-making.

- Scenario 3 is marked by slow AI development, public skepticism, and inadequate investment in AI education, leading to a widening skills gap.

- Scenario 4 features AI systems with high levels of autonomy and creativity, sparking debates around ethical implications and a cautious societal adaptation to AI’s integration into daily life.

From the perspective of publishers navigating these scenarios, challenges and opportunities vary:

- Scenario 1 (“Democratizing AI”) might lead to debates over the role and value of traditional publishing functions, with a potential risk of industry consolidation.

- Scenario 2 (“Technocratic AI”), where AI is commercially driven without clear standards, could see publishers struggling to maintain research quality and integrity, competing against tech giants with superior AI capabilities.

- Scenario 3 (“Divisive AI”) might find AI tools amplifying existing biases within research and publishing, calling for publishers to address and mitigate these biases actively.

- Scenario 4 (“Autonomous AI”) could place publishers at the forefront of redefining research communication, challenging traditional concepts of authorship and peer review as AI becomes a more autonomous research partner.

These scenarios underscore the critical need for intentional, ethical AI development and thoughtful societal adaptation to harness AI’s potential while mitigating its risks in the research library environment.

– By Tony Alves